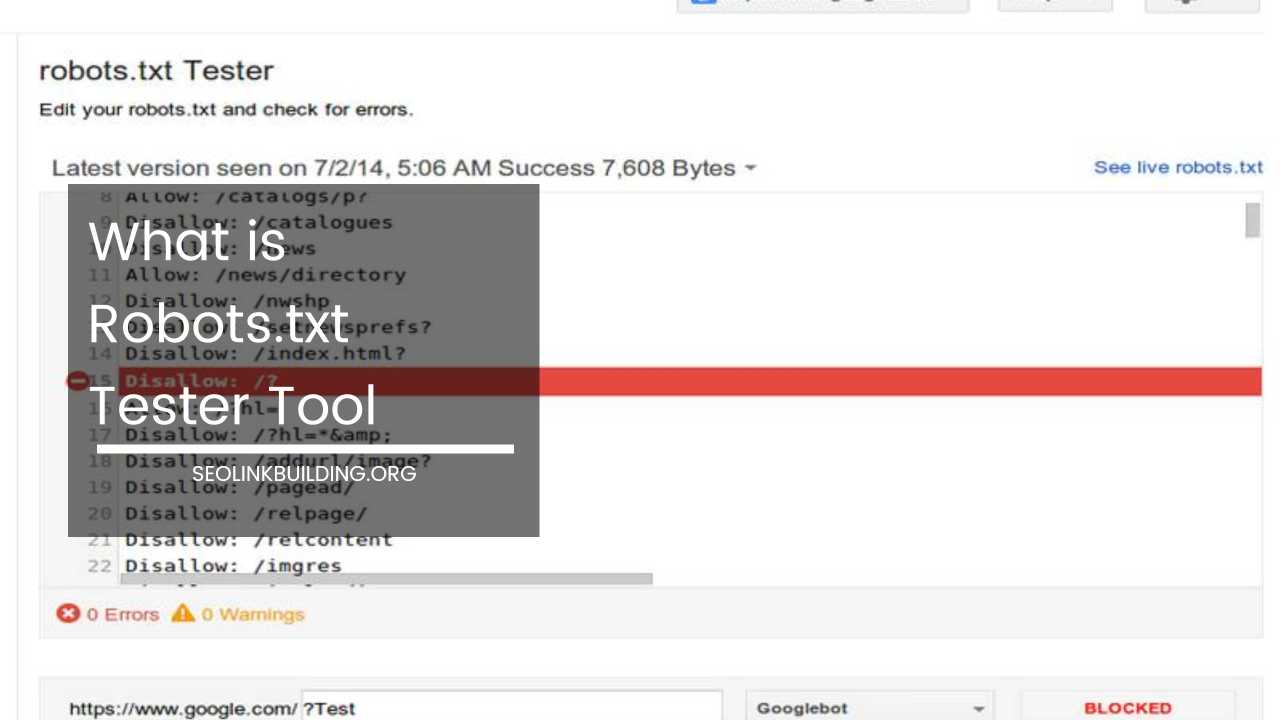

What is a Robots.txt Tester Tool and Why Do You Need One?

In the ever-evolving landscape of Search Engine Optimization (SEO), ensuring your website is seen by the right crawlers remains paramount.

This is where the robots.txt file steps in, acting as a communication bridge between your website and search engine crawlers like Googlebot. But how do you know if your robots.txt file is functioning as intended?

A robots.txt tester tool answers that question.

Demystifying Robots.txt

A robots.txt file is a plain-text file placed in the root directory of your website. It tells search engine crawlers which pages and files they may crawl.

This lets you improve your website’s crawling efficiency and keep crawlers away from unfinished or low-value sections. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.

Here’s a breakdown of the key components of a robots.txt file:

- User-agent directives: These specify which search engine crawlers (identified by their user-agent strings) the instructions apply to. Common examples include “Googlebot” and “Bingbot.”

- Allow directives: These define the URLs or directories that crawlers are permitted to access. They are typically used to carve out an exception inside a directory that is otherwise disallowed.

- Disallow directives: These specify the URLs or directories that crawlers should not access. This can be used to prevent crawling of sensitive information, login pages, or duplicate content.

- Crawl-delay directive (optional): This sets a suggested delay (in seconds) between requests made by a specific user-agent. This can be helpful for websites with limited server resources. Not every crawler honors it; Googlebot, for example, ignores this directive.
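
To make these components concrete, the sketch below parses a small, hypothetical robots.txt with Python’s standard-library `urllib.robotparser` and asks which paths each crawler may fetch. The rules, the paths, and the `example.com` host are assumptions made up for illustration. This parser applies the first matching rule in file order and does not understand wildcards, whereas Google picks the most specific matching rule, so treat the output as an approximation rather than an exact model of any search engine.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; the paths are illustrative, not taken from a real site.
ROBOTS_TXT = """\
User-agent: *
Allow: /admin/help/
Disallow: /admin/
Disallow: /login

User-agent: Bingbot
Disallow: /drafts/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the text directly, no network request

BASE = "https://www.example.com"  # placeholder host

for agent, path in [
    ("Googlebot", "/admin/settings"),  # blocked by Disallow: /admin/
    ("Googlebot", "/admin/help/faq"),  # allowed by the Allow exception
    ("Bingbot", "/drafts/post"),       # blocked by the Bingbot group
    ("Bingbot", "/admin/settings"),    # allowed: Bingbot follows only its own group
]:
    verdict = "allowed" if parser.can_fetch(agent, BASE + path) else "blocked"
    print(f"{agent:10} {path:18} {verdict}")

print("Bingbot crawl delay:", parser.crawl_delay("Bingbot"))
```

Because Bingbot has its own group in this example, it does not inherit the rules from the `*` group, which is an easy detail to miss when writing per-crawler sections.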

While robots.txt files offer control over crawling, it’s important to remember that the rules are advisory. Reputable search engines generally follow them, but nothing forces a crawler to comply.

Why Use a Robots.txt Tester Tool?

Now that you understand the importance of a robots.txt file, let’s delve into why a robots.txt tester tool is a crucial addition to your SEO toolbox.

- Ensuring Accuracy: Manually verifying complex robots.txt configurations can be time-consuming and prone to errors. A robots.txt tester tool automates the process, meticulously analyzing your file’s syntax and identifying any potential issues that might hinder crawling. This saves you valuable time and resources while ensuring your robots.txt file functions as intended.

- Testing New Rules: Before deploying changes to your live robots.txt file, you can leverage a tester tool to simulate how search engine crawlers would interpret the new directives. This allows you to identify and rectify any unintended consequences before they impact your website’s visibility. For example, you might accidentally block essential content by adding a new disallow directive. A tester tool can help you catch this issue before it goes live.

- Debugging Blocking Issues: If you suspect your robots.txt file might be blocking important content from search engine crawlers, a tester tool can pinpoint the specific directives causing the problem. This can be invaluable for troubleshooting unexpected crawl behavior. Imagine a scenario where important product pages are not being indexed. A tester tool can help you identify a disallow directive that might be unintentionally blocking these pages.

- Peace of Mind: With a robots.txt tester tool regularly verifying your file’s functionality, you can rest assured that search engines have proper access to your website’s content. This contributes to a more accurate and efficient crawling process, ultimately impacting your website’s search engine ranking potential.

- Advanced Features: Many robots.txt tester tools offer additional functionalities beyond basic syntax checking. These features can include:

- User-Agent Simulation: Some tools allow you to simulate crawling from the perspective of different search engine crawlers, providing insights into how each crawler might interpret your robots.txt file.

- Crawl Budget Optimization: These tools can analyze your crawl directives and suggest ways to optimize your crawl budget, ensuring search engines prioritize indexing your most important content.

- Historical Analysis: Advanced tools might offer the ability to track changes made to your robots.txt file over time and analyze their impact on crawling behavior. This historical data can be invaluable for identifying trends and making informed decisions about future modifications.
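
The "Testing New Rules" and "User-Agent Simulation" ideas above can be sketched in a few lines. The example below compares a current and a proposed robots.txt against a list of URLs that must stay crawlable and reports anything the change would newly block, per crawler. The helper names (`build_parser`, `newly_blocked`), the rule sets, and the URLs are all hypothetical, and the matching is done by Python’s `urllib.robotparser`, which uses simple prefix matching and may differ from how a given search engine resolves rules.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Parse robots.txt text without touching the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def newly_blocked(current, proposed, urls, agents=("Googlebot", "Bingbot")):
    """Return (agent, url) pairs crawlable under `current` but not under `proposed`."""
    before, after = build_parser(current), build_parser(proposed)
    return [
        (agent, url)
        for agent in agents
        for url in urls
        if before.can_fetch(agent, url) and not after.can_fetch(agent, url)
    ]


# Hypothetical rule sets: the new "/p" rule was meant for "/private/" only,
# but as a prefix it also matches "/products/".
CURRENT = """\
User-agent: *
Disallow: /cart/
"""

PROPOSED = """\
User-agent: *
Disallow: /cart/
Disallow: /p
"""

MUST_STAY_CRAWLABLE = [
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/blog/launch-notes",
]

regressions = newly_blocked(CURRENT, PROPOSED, MUST_STAY_CRAWLABLE)
for agent, url in regressions:
    print(f"{agent} would lose access to {url}")
```

Running a check like this before each deployment is one way to catch an overly broad disallow rule while it is still only a proposal.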

How Does a Robots.txt Tester Tool Work?

Using a robots.txt tester tool is a straightforward process, typically involving the following steps:

- Locate the Tool: There’s a wealth of free and paid robots.txt tester tools available online. Here are some popular choices:

- Google Search Console (Free)

- Ryte Robots.txt validator and testing tool (Free)

- Tame the Bots Robots.txt Testing & Validator Tool (Free)

- Screaming Frog SEO Spider Tool (Paid; Offers robots.txt testing as part of its functionality)

- Input Your Robots.txt File: Most tools allow you to either:

- Paste the contents of your robots.txt file directly.

- Provide the URL of your live robots.txt file (if publicly accessible).

- Run the Test: Once you’ve provided the necessary information, initiate the test by clicking a designated button.

- Analyze the Results: The tool will analyze your robots.txt file and present a detailed report. This report typically includes:

- Syntax Validation: Checks for any errors in the structure and formatting of your robots.txt file. This ensures the file is readable and interpretable by search engine crawlers.

- Directive Breakdown: Provides a clear overview of the allow and disallow directives present in your file, making it easier to understand how your robots.txt file is configured.

- Potential Issues: Highlights any directives that might be causing unintended consequences, such as accidentally blocking important content or allow and disallow rules that conflict for the same URL.

- User-Agent Specific Analysis: If your robots.txt file includes directives for different search engine crawlers, the report will analyze them individually. This helps you ensure consistent crawling behavior across different search engines.
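
As a rough illustration of the syntax validation and directive breakdown steps described above, here is a minimal sketch of what such a check might look like. It is not how any of the listed tools is implemented. The `analyze` function, the set of recognized fields, and the sample file are assumptions chosen for the example; real validators cover many more cases.

```python
from collections import defaultdict

# Fields this sketch recognizes; an assumption, not an exhaustive list.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "crawl-delay", "sitemap"}


def analyze(robots_txt):
    """Return (issues, breakdown) for the given robots.txt text.

    issues    -- list of (line_number, message) tuples
    breakdown -- dict mapping each user-agent to its (field, value) rules
    """
    issues = []
    breakdown = defaultdict(list)
    group = []               # user-agents of the group currently being read
    group_has_rules = False

    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and whitespace
        if not line:
            continue
        if ":" not in line:
            issues.append((number, "missing ':' between field and value"))
            continue

        field, value = (part.strip() for part in line.split(":", 1))
        name = field.lower()

        if name not in KNOWN_FIELDS:
            issues.append((number, f"unknown field '{field}'"))
        elif name == "user-agent":
            if group_has_rules:  # a rule was already seen, so a new group starts
                group, group_has_rules = [], False
            group.append(value)
        elif name == "sitemap":
            continue             # sitemap lines are not tied to a group
        elif not group:
            issues.append((number, f"'{field}' appears before any User-agent line"))
        else:
            if name in ("allow", "disallow") and value and not value.startswith(("/", "*")):
                issues.append((number, f"path '{value}' should start with '/'"))
            for agent in group:
                breakdown[agent].append((name, value))
            group_has_rules = True

    return issues, dict(breakdown)


# Hypothetical file with deliberate mistakes on lines 1, 3, 4, and 5.
SAMPLE = """\
Disallow: /tmp/
User-agent: *
Disalow: /private/
Disallow: admin/
Allow /public/

User-agent: Googlebot
User-agent: Bingbot
Disallow: /search
"""

issues, breakdown = analyze(SAMPLE)
for number, message in issues:
    print(f"line {number}: {message}")
for agent, rules in breakdown.items():
    print(agent, rules)
```

The per-agent breakdown mirrors the "User-Agent Specific Analysis" item: consecutive `User-agent` lines share the rules that follow them, so Googlebot and Bingbot end up with the same entry here.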

Leveraging Robots.txt Tester Tools for Effective SEO

By incorporating robots.txt tester tools into your SEO workflow, you can achieve the following benefits:

- Improved Crawlability: By ensuring your robots.txt file is accurate and efficient, you allow search engine crawlers to access and index your website’s content more effectively. This can lead to better search engine ranking and increased organic traffic.

- Enhanced SEO Performance: A well-configured robots.txt file can help you optimize your crawl budget, ensuring search engines prioritize indexing your most valuable content. This can lead to a more efficient crawling process and potentially improve your website’s overall SEO performance.

- Reduced Technical SEO Issues: Robots.txt tester tools can help you identify and fix technical SEO issues related to your robots.txt file. This can prevent potential crawling problems that might hinder your website’s search engine visibility.

- Streamlined Website Management: These tools can streamline the process of managing your robots.txt file, especially for websites with complex configurations. They can save you time and resources by automating tasks like syntax checking and identifying potential conflicts.

- Greater Peace of Mind: Knowing your robots.txt file is functioning as intended can provide valuable peace of mind. This allows you to focus on other aspects of your SEO strategy without worrying about unintended consequences from crawl directives.

Final Thoughts

A robots.txt file plays a crucial role in how search engine crawlers interact with your website. By utilizing a robots.txt tester tool, you can ensure your file is accurate, efficient, and doesn’t hinder your website’s SEO performance.

By leveraging these tools effectively, you can optimize your website’s crawlability and pave the way for improved search engine visibility.

Additional Tips:

- Regularly review and update your robots.txt file, especially when making significant changes to your website’s structure or content.

- Consider using a robots.txt generator tool to create a basic structure for your file, especially if you’re new to SEO.

- Don’t rely solely on robots.txt to prevent sensitive information from being indexed. Consider password protection or other methods for truly confidential data.

- Stay updated on the latest best practices for robots.txt file configuration, as search engine guidelines may evolve over time.

By following these tips and incorporating robots.txt tester tools into your SEO strategy, you can ensure your website is effectively crawled and indexed by search engines, ultimately contributing to a more successful online presence.